Epipolar Transformers

Yihui He, Rui Yan, Katerina Fragkiadaki, Shoou-I Yu (Carnegie Mellon University, Facebook Reality Labs)

CVPR 2020, CVPR workshop Best Paper Award

Oral presentation and human pose demo videos (playlist)

Models

We also provide 2D to 3D lifting network implementations for these two papers:

- 3D Hand Shape and Pose from Images in the Wild, CVPR 2019

  - configs/lifting/img_lifting_rot_h36m.yaml (Human 3.6M)
  - configs/lifting/img_lifting_rot.yaml (RHD)

- Learning to Estimate 3D Hand Pose from Single RGB Images, ICCV 2017

  - configs/lifting/lifting_direct_h36m.yaml (Human 3.6M)
  - configs/lifting/lifting_direct.yaml (RHD)

Setup

Requirements

Python 3, PyTorch >= 1.2 and < 1.4

pip install -r requirements.txt

conda install pytorch cudatoolkit=10.0 -c pytorch
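
After installation, the PyTorch version constraint can be checked with a short script. This is a minimal sketch, assuming the requirement means 1.2 inclusive up to, but not including, 1.4; `parse_version` and `version_in_range` are hypothetical helpers and are not part of this repository.

```python
import re


def parse_version(version):
    """Return (major, minor) from a version string such as '1.3.1+cu100'."""
    match = re.match(r"(\d+)\.(\d+)", version)
    if match is None:
        raise ValueError(f"unrecognized version string: {version!r}")
    return int(match.group(1)), int(match.group(2))


def version_in_range(version, low=(1, 2), high=(1, 4)):
    """True if low <= version < high, comparing (major, minor) only.

    The bounds are an assumption about what the requirement line means.
    """
    return low <= parse_version(version) < high


if __name__ == "__main__":
    try:
        import torch
    except ImportError:
        print("PyTorch is not installed")
    else:
        ok = version_in_range(torch.__version__)
        print(torch.__version__, "supported" if ok else "outside the supported range")
```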

Pretrained weights download

mkdir outs

cd datasets/

bash get_pretrained_models.sh

Please follow the instructions in datasets/README.md to prepare the dataset.

Training

python main.py --cfg path/to/config

tensorboard --logdir outs/

Testing

Testing with latest checkpoints

python main.py --cfg configs/xxx.yaml DOTRAIN False

Testing with weights

python main.py --cfg configs/xxx.yaml DOTRAIN False WEIGHTS xxx.pth
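
The trailing KEY VALUE pairs in the commands above override entries of the YAML config, and dotted keys such as SOLVER.IMS_PER_BATCH address nested entries. How main.py parses them is not shown here, so the following is only an illustrative sketch of the idea on a plain nested dict. `parse_value` and `apply_overrides` are hypothetical helpers, and the default values are made up.

```python
import ast


def parse_value(text):
    """Interpret 'False', '1', '0.5' as Python literals; keep anything else as a string."""
    try:
        return ast.literal_eval(text)
    except (ValueError, SyntaxError):
        return text


def apply_overrides(config, pairs):
    """Apply a flat [KEY, VALUE, KEY, VALUE, ...] list to a nested dict in place."""
    if len(pairs) % 2 != 0:
        raise ValueError("overrides must come in KEY VALUE pairs")
    for key, raw in zip(pairs[0::2], pairs[1::2]):
        node = config
        *parents, leaf = key.split(".")
        for name in parents:
            # Walk (or create) the nested section named by each dotted prefix.
            node = node.setdefault(name, {})
        node[leaf] = parse_value(raw)
    return config


# Illustrative defaults only; the real config has many more entries.
config = {"DOTRAIN": True, "WEIGHTS": "", "SOLVER": {"IMS_PER_BATCH": 8}}
apply_overrides(
    config,
    ["DOTRAIN", "False", "WEIGHTS", "xxx.pth", "SOLVER.IMS_PER_BATCH", "1"],
)
print(config)
```

Parsing values as literals keeps False a boolean and 1 an integer, while paths such as xxx.pth fall back to plain strings.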

Visualization

Epipolar Transformers Visualization

- Download the output pkls for non-augmented models and extract under

outs/ - Make sure

outs/epipolar/keypoint_h36m_fixed/visualizations/h36m/output_1.pklexists. - Use

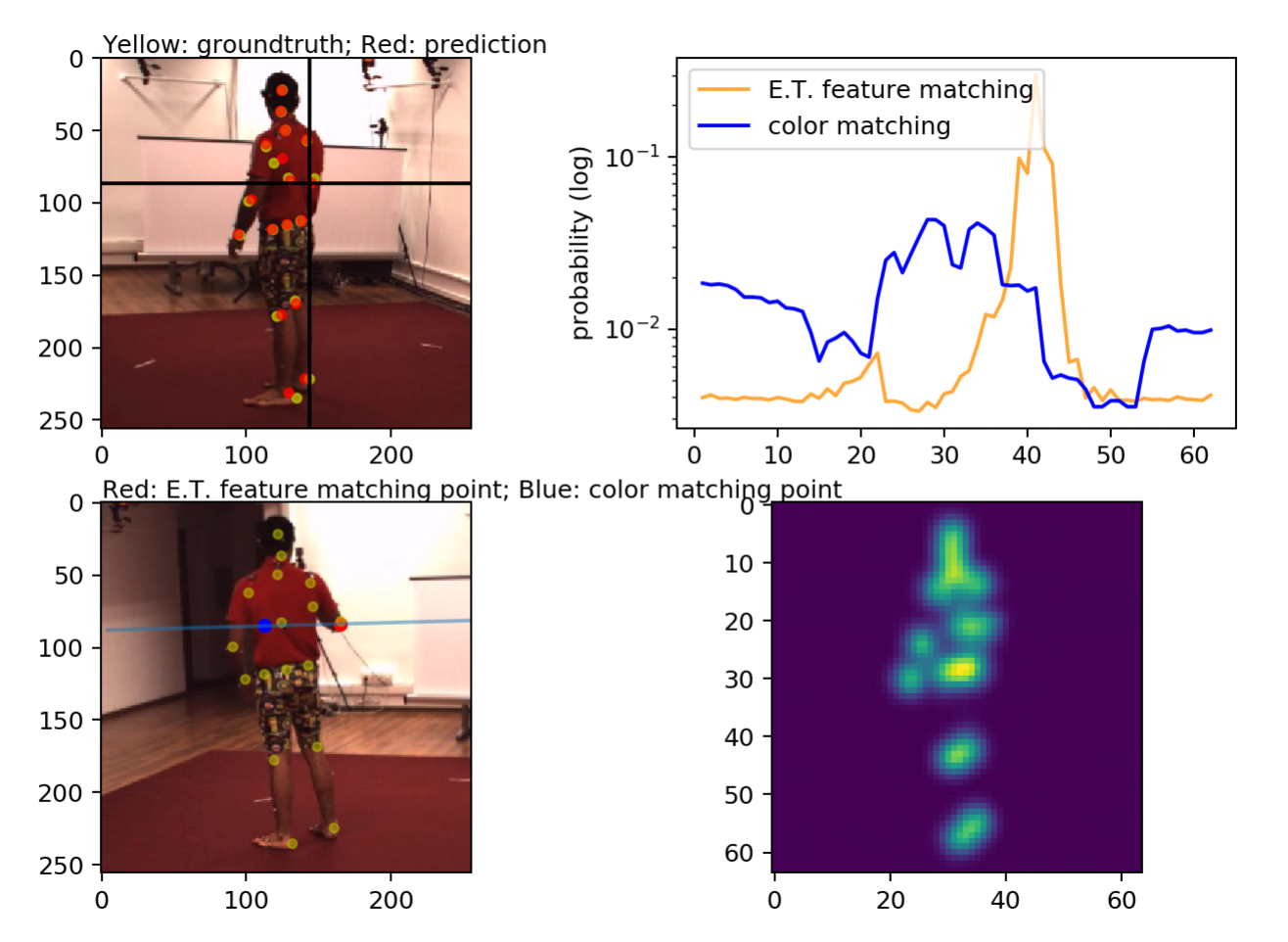

scripts/vis_hm36_score.ipynb- To select a point, click on the reference view (upper left), the source view along with corresponding epipolar line, and the peaks for different feature matchings are shown at the bottom left.
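
The epipolar line drawn in the source view follows from standard two-view geometry: for a reference pixel p (in homogeneous coordinates) and a fundamental matrix F satisfying p'^T F p = 0, the line in the source view is l = F p. The sketch below is not the notebook's implementation. `epipolar_line` and `sample_line` are hypothetical helpers, and `F_RECTIFIED` is the textbook fundamental matrix of a rectified pair (pure horizontal translation), chosen only for illustration.

```python
def epipolar_line(F, point):
    """Return (a, b, c) with a*x' + b*y' + c = 0 in the source view, i.e. l = F [x, y, 1]^T."""
    x, y = point
    p = (x, y, 1.0)
    return tuple(sum(F[r][k] * p[k] for k in range(3)) for r in range(3))


def sample_line(line, width, num=5):
    """Sample num points (x', y') on the line across the image width.

    Requires num >= 2 and a non-vertical line (b != 0).
    """
    a, b, c = line
    if b == 0:
        raise ValueError("vertical line: solve for x' instead of y'")
    xs = [i * (width - 1) / (num - 1) for i in range(num)]
    return [(x, -(a * x + c) / b) for x in xs]


# Assumed example: fundamental matrix of a rectified stereo pair.
F_RECTIFIED = [[0, 0, 0], [0, 0, -1], [0, 1, 0]]

line = epipolar_line(F_RECTIFIED, (120.0, 80.0))
points = sample_line(line, width=256)
print(line)
print(points)
```

In this rectified example the epipolar line is the image row of the reference pixel, so every sampled point has the same y coordinate as the clicked point. For real camera pairs, F comes from the calibrated projection matrices and the line is generally slanted.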

Human 3.6M input visualization

python main.py --cfg configs/epipolar/keypoint_h36m.yaml DOTRAIN False DOTEST False EPIPOLAR.VIS True VIS.H36M True SOLVER.IMS_PER_BATCH 1

python main.py --cfg configs/epipolar/keypoint_h36m.yaml DOTRAIN False DOTEST False VIS.MULTIVIEWH36M True EPIPOLAR.VIS True SOLVER.IMS_PER_BATCH 1

Human 3.6M prediction visualization

# generate images

python main.py --cfg configs/epipolar/keypoint_h36m_zresidual_fixed.yaml DOTRAIN False DOTEST True VIS.VIDEO True DATASETS.H36M.TEST_SAMPLE 2

# generate images

python main.py --cfg configs/benchmark/keypoint_h36m.yaml DOTRAIN False DOTEST True VIS.VIDEO True DATASETS.H36M.TEST_SAMPLE 2

# use https://github.com/yihui-he/multiview-human-pose-estimation-pytorch to generate images for ICCV 19

python run/pose2d/valid.py --cfg experiments-local/mixed/resnet50/256_fusion.yaml # set test batch size to 1 and PRINT_FREQ to 2

# generate video

python scripts/video.py --src outs/epipolar/keypoint_h36m_fixed/video/multiview_h36m_val/

Citing Epipolar Transformers

If you find Epipolar Transformers helpful for your research, please cite the paper:

@inproceedings{epipolartransformers,

title={Epipolar Transformers},

author={He, Yihui and Yan, Rui and Fragkiadaki, Katerina and Yu, Shoou-I},

booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},

pages={7779--7788},

year={2020}

}

FAQ

Please create a new issue.